
Apache Hadoop

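Hadoop is a software platform that makes it easy to develop and run applications that process massive amounts of data... Hadoop implements MapReduce and uses the Hadoop Distributed File System (HDFS). MapReduce splits an application into many small tasks for execution. For reliability, HDFS creates multiple replicas of each data block and places them on compute nodes across the cluster, so MapReduce can process the data where the replicas are stored.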
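The split-into-small-tasks idea can be sketched in plain Python. This is only an in-memory illustration of the map, shuffle, and reduce phases using word counting; it does not use any Hadoop API, and the function names (`map_task`, `shuffle`, `reduce_task`, `run_job`) are hypothetical.

```python
from collections import defaultdict


def map_task(split):
    """Map phase: emit a (word, 1) pair for every word in one input split."""
    return [(word, 1) for line in split for word in line.split()]


def shuffle(mapped_outputs):
    """Group all emitted values by key, between the map and reduce phases."""
    groups = defaultdict(list)
    for pairs in mapped_outputs:
        for key, value in pairs:
            groups[key].append(value)
    return groups


def reduce_task(key, values):
    """Reduce phase: combine all values that share one key."""
    return key, sum(values)


def run_job(splits):
    # Each split is handled by an independent map task; on a real cluster
    # these tasks would run on the nodes that hold the data.
    mapped = [map_task(split) for split in splits]
    grouped = shuffle(mapped)
    return dict(reduce_task(key, values) for key, values in grouped.items())


# Two hypothetical input splits, each a list of lines.
splits = [["hadoop stores blocks", "hadoop runs tasks"], ["tasks read blocks"]]
counts = run_job(splits)
```

Because every map task only sees its own split, the tasks can be scheduled independently, which is what allows the work to be moved to wherever a replica of the data is stored.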

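Hadoop splits a large file into blocks of 64 MB each. These blocks are stored as ordinary files on the individual nodes. By default, each block has 3 replicas, which keeps the data safe: even if a machine goes down, the system detects the failure and automatically chooses a new node to hold another copy.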
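As a rough worked example of these numbers, the sketch below computes how many blocks a file needs and how much raw storage its replicas consume. It assumes the 64 MB block size and replication factor of 3 stated above, and additionally assumes that the last, partially filled block occupies only its actual size; `block_layout` is a hypothetical helper, not a Hadoop function.

```python
BLOCK_SIZE = 64 * 1024 * 1024  # 64 MB, the block size stated in the text
REPLICATION = 3                # default number of replicas stated in the text


def block_layout(file_size_bytes, block_size=BLOCK_SIZE, replication=REPLICATION):
    """Return (number of blocks, total stored block copies, raw bytes used)."""
    # Integer ceiling division: a partial final block still counts as a block.
    num_blocks = (file_size_bytes + block_size - 1) // block_size
    stored_copies = num_blocks * replication
    # Assumption: a partial block is not padded, so raw usage is size * replicas.
    raw_bytes = file_size_bytes * replication
    return num_blocks, stored_copies, raw_bytes


one_gib = 1024 ** 3
blocks, copies, raw = block_layout(one_gib)
```

For a 1 GiB file this gives 16 blocks, 48 stored block copies, and 3 GiB of raw storage.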

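Hadoop has one master node and multiple data nodes. To perform an operation such as a query, a client only needs to talk to the master node (commonly called the metadata server) to obtain the file information it needs, and then communicates with the data nodes to transfer the actual data.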
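The two-step read path (metadata from the master, bytes from the data nodes) can be modelled with a toy example. The classes and method names below (`MasterNode`, `DataNode`, `get_block_locations`, `read_block`) are hypothetical and exist only for illustration; they are not the Hadoop client API.

```python
class MasterNode:
    """Holds metadata only: which blocks make up a file and where they live."""

    def __init__(self):
        self.block_map = {}  # path -> list of (block_id, [data node names])

    def get_block_locations(self, path):
        return self.block_map[path]


class DataNode:
    """Holds the actual block contents."""

    def __init__(self):
        self.blocks = {}  # block_id -> bytes

    def read_block(self, block_id):
        return self.blocks[block_id]


def read_file(master, data_nodes, path):
    """Ask the master where the blocks are, then fetch them from data nodes."""
    data = b""
    for block_id, holders in master.get_block_locations(path):
        node = data_nodes[holders[0]]  # simplification: always use the first replica
        data += node.read_block(block_id)
    return data


master = MasterNode()
data_nodes = {"dn1": DataNode(), "dn2": DataNode()}
data_nodes["dn1"].blocks["blk_0"] = b"hello "
data_nodes["dn2"].blocks["blk_0"] = b"hello "
data_nodes["dn2"].blocks["blk_1"] = b"world"
master.block_map["/demo.txt"] = [("blk_0", ["dn1", "dn2"]), ("blk_1", ["dn2"])]

content = read_file(master, data_nodes, "/demo.txt")
```

Note that no file data ever passes through `MasterNode` in this sketch, which mirrors the division of labour described above.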

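When the master starts (for example, after going down), it rebuilds the file system's directory tree by re-executing the previously performed operations. Because the tree is held directly in memory, queries are very efficient.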
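Rebuilding the tree by replaying earlier operations might look roughly like the following. The log format (a list of `(operation, path)` tuples) and the operation names `mkdir`, `create`, and `delete` are assumptions made for this sketch; Hadoop's real on-disk log format is different.

```python
def replay(edit_log):
    """Rebuild an in-memory directory tree by re-applying logged operations in order."""
    tree = {}
    for op, path in edit_log:
        parts = [p for p in path.split("/") if p]
        parent = tree
        for name in parts[:-1]:
            parent = parent[name]  # assumes parents were created earlier in the log
        if op == "mkdir":
            parent[parts[-1]] = {}    # a dict marks a directory
        elif op == "create":
            parent[parts[-1]] = None  # None marks a file
        elif op == "delete":
            del parent[parts[-1]]
    return tree


def exists(tree, path):
    """Look up a path with plain dictionary accesses, with no disk I/O involved."""
    node = tree
    for name in (p for p in path.split("/") if p):
        if not isinstance(node, dict) or name not in node:
            return False
        node = node[name]
    return True


edit_log = [
    ("mkdir", "/logs"),
    ("create", "/logs/a.txt"),
    ("create", "/logs/b.txt"),
    ("delete", "/logs/a.txt"),
]
tree = replay(edit_log)
```

After the replay, lookups such as `exists(tree, "/logs/b.txt")` touch only in-memory dictionaries, which is the reason given above for fast queries.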

Core: Hadoop Distributed File System

HBase: Bigtable-like structured storage for Hadoop HDFS


Quick Start

$ mkdir input

$ cp conf/*.xml input

$ bin/hadoop jar hadoop-*-examples.jar grep input output 'dfs[a-z.]+'

$ cat output/*

Use the following conf/hadoop-site.xml:

<configuration>

<property>

<name>fs.default.name</name>

<value>localhost:9000</value>

</property>

<property>

<name>mapred.job.tracker</name>

<value>localhost:9001</value>

</property>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

</configuration>
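To sanity-check a file like this before starting the daemons, the name/value pairs can be read with Python's standard XML parser. This is a minimal sketch; `load_properties` is a hypothetical helper, and the XML is inlined here so the example is self-contained rather than read from conf/hadoop-site.xml.

```python
import xml.etree.ElementTree as ET

# The same three properties as in the configuration above, inlined for the example.
SITE_XML = """
<configuration>
  <property>
    <name>fs.default.name</name>
    <value>localhost:9000</value>
  </property>
  <property>
    <name>mapred.job.tracker</name>
    <value>localhost:9001</value>
  </property>
  <property>
    <name>dfs.replication</name>
    <value>1</value>
  </property>
</configuration>
"""


def load_properties(xml_text):
    """Return a dict mapping each <name> to its <value>."""
    root = ET.fromstring(xml_text)
    return {
        prop.findtext("name"): prop.findtext("value")
        for prop in root.findall("property")
    }


props = load_properties(SITE_XML)
```

Here `props["dfs.replication"]` is the string `"1"`, reflecting that this single-machine setup keeps only one copy of each block.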

Now check that you can ssh to the localhost without a passphrase:

$ ssh localhost

If you cannot ssh to localhost without a passphrase, execute the following commands:

$ ssh-keygen -t dsa -P '' -f ~/.ssh/id_dsa

$ cat ~/.ssh/id_dsa.pub >> ~/.ssh/authorized_keys

Format a new distributed-filesystem:

$ bin/hadoop namenode -format

....

08/03/19 11:15:41 INFO dfs.Storage: Storage directory /tmp/hadoop-allen/dfs/name has been successfully formatted.

Start the Hadoop daemons:

$ bin/start-all.sh

Browse the web interface for the NameNode and the JobTracker; by default they are available at:

NameNode - http://localhost:50070/

JobTracker - http://localhost:50030/

Copy the input files into the distributed filesystem:

$ bin/hadoop dfs -put conf input

Run some of the examples provided:

$ bin/hadoop jar hadoop-*-examples.jar grep input output 'dfs[a-z.]+'
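For intuition about what this job computes, here is a local Python sketch that extracts every match of the same regular expression and counts how often each distinct match occurs. It is not the Hadoop implementation; the most-frequent-first ordering with alphabetical tie-breaking and the sample input lines are assumptions made for illustration.

```python
import re
from collections import Counter


def grep_count(lines, pattern):
    """Count occurrences of each distinct regex match across all lines."""
    regex = re.compile(pattern)
    counts = Counter()
    for line in lines:
        counts.update(regex.findall(line))
    # Assumed ordering: most frequent first, ties broken alphabetically.
    return sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))


# Hypothetical input lines resembling the XML files copied into "input".
lines = [
    "<name>dfs.replication</name>",
    "dfs.replication controls copies",
    "<name>dfs.name.dir</name>",
]
result = grep_count(lines, r"dfs[a-z.]+")
```

With this input, `result` is `[("dfs.replication", 2), ("dfs.name.dir", 1)]`.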

Examine the output files:

Copy the output files from the distributed filesystem to the local filesystem and examine them:

$ bin/hadoop dfs -get output output

$ cat output/*

Or view the output files on the distributed filesystem:

$ bin/hadoop dfs -cat output/*

When you're done, stop the daemons with:

$ bin/stop-all.sh

Tutorial

- Writing An Hadoop MapReduce Program In Python

- Running Hadoop On Ubuntu Linux (Single-Node Cluster)

- Running Hadoop On Ubuntu Linux (Multi-Node Cluster)

Python

Developer

Yahoo

Yahoo! Distribution of Hadoop http://developer.yahoo.com/hadoop/

Powered By

We use Hadoop to store copies of internal log and dimension data sources and use it as a source for reporting/analytics and machine learning. We currently have around a hundred machines: low-end commodity boxes with about 1.5TB of storage each. Our data sets are currently of the order of 10s of TB and we routinely process multiple TBs of data every day. We are in the process of adding a 320 machine cluster with 2,560 cores and about 1.3 PB raw storage. Each (commodity) node will have 8 cores and 4 TB of storage. We are heavy users of both streaming as well as the Java APIs. We have built a higher level data warehousing framework using these features (that we will open source at some point). We have also written a read-only FUSE implementation over HDFS.

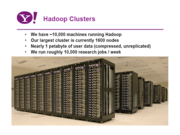

More than 5000 nodes running Hadoop as of July 2007; biggest cluster: 2000 nodes (2*4cpu boxes with 3TB disk each). Used to support research for Ad Systems and Web Search. Also used to do scaling tests to support development of Hadoop on larger clusters.

25 node cluster (dual xeon LV, 1TB/node storage), Used for charts calculation and web log analysis

up to 400 instances on Amazon EC2, data storage in Amazon S3

Using Hadoop to process Apache logs, analyzing users' actions and click flow, the links clicked on any specified page of the site, and more. Using Hadoop to process all price data entered by users with map/reduce.

More: http://wiki.apache.org/hadoop/PoweredBy

Gallery

Links

- The University of Washington also started a Hadoop-based distributed computing course around that time

- http://hadoop.apache.org/

- http://wiki.apache.org/hadoop/

- Yahoo's Hadoop Support

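- Hadoop, an open source project similar to Google's architecture, has recently gained community attention

- Structure Big Data reveals Hadoop's future: DataStax Brisk, EMC, and MapReduce

- Building a distributed storage and distributed computing cluster with Hadoop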

- Hadoop to run on EC2

- Run Your Own Google Style Computing Cluster with Hadoop and Amazon EC2

- Hadoop documentation

- Hadoop downloads

Commercial
